Most organisations don’t suffer from a lack of data; they suffer from decisions that arrive too slowly, in too few places, and with too much manual stitching to trust at scale. Composable analytics and generative decisioning tackle this head-on: the former breaks analytics into interchangeable building blocks, while the latter uses generative AI to propose, explain, and execute choices with human oversight. Together, they turn decision-making from a brittle, one-off project into a living system that learns.

What “composable” really means

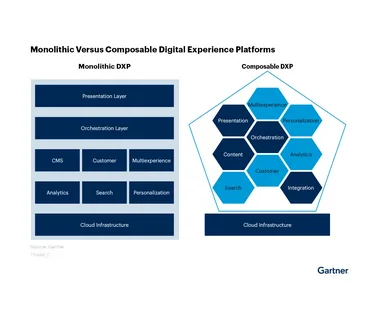

Composable analytics is an architectural stance, not a product logo. Instead of a single monolith, you assemble small, interoperable components—data products, semantic layers, transformation units, feature stores, metrics definitions—that can be rearranged as needs evolve. In practical terms, this lets you swap a forecasting model without rewriting dozens of dashboards, or add a new channel to a marketing mix without refactoring the warehouse. It’s the opposite of spreadsheet sprawl: contracts, interfaces, and versioning keep the pieces honest. Think of it as a Lego kit for analytics rather than a glued model. Industry definitions converge on this idea of modular, mix-and-match analytics composed from multiple tools and services.
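The “swap a forecasting model without rewriting dashboards” claim depends on consumers relying on an interface rather than on a specific model. Here is a minimal Python sketch of that idea, assuming a hypothetical `Forecaster` contract; the class names and the two toy models are illustrative, not any product’s API.

```python
from typing import Protocol, Sequence


class Forecaster(Protocol):
    """Hypothetical contract: map a history of values to a next-period forecast."""

    version: str

    def forecast(self, history: Sequence[float]) -> float: ...


class NaiveForecaster:
    """Toy model: the next value equals the last observed value."""

    version = "naive-1.0"

    def forecast(self, history: Sequence[float]) -> float:
        return float(history[-1])


class MovingAverageForecaster:
    """Toy model: the mean of the last `window` observations."""

    def __init__(self, window: int = 3) -> None:
        self.window = window
        self.version = f"moving-average-{window}"

    def forecast(self, history: Sequence[float]) -> float:
        recent = history[-self.window:]
        return sum(recent) / len(recent)


def dashboard_tile(model: Forecaster, history: Sequence[float]) -> str:
    """Downstream consumer: depends only on the Forecaster contract, not on a model."""
    return f"Next period: {model.forecast(history):.1f} (model {model.version})"


sales = [100.0, 110.0, 120.0]
print(dashboard_tile(NaiveForecaster(), sales))           # Next period: 120.0 (model naive-1.0)
print(dashboard_tile(MovingAverageForecaster(3), sales))  # Next period: 110.0 (model moving-average-3)
```

Because `dashboard_tile` only uses `forecast()` and `version`, replacing the model is a change at the call site, and the version string travels with every number the dashboard shows.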

A composable stack thrives on modular transformation and testing. Teams that adopt templated SQL, clear naming conventions, and automated checks can ship changes quickly without breaking downstream decision flows. Contemporary transformation tooling has pushed these practices into the mainstream, emphasising readability, unit tests, and lineage—critical traits when dozens of micro-components feed a single decision surface.
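To show what a modular, tested transformation unit can look like outside any particular tool, here is a small Python sketch. The `customer_revenue` function, the fixture rows, and the two checks are hypothetical; the checks mirror the not-null and uniqueness tests that transformation tools such as dbt offer, but this is plain Python, not dbt.

```python
from collections import defaultdict


def customer_revenue(orders: list[dict]) -> list[dict]:
    """Transformation unit (hypothetical): one revenue row per customer."""
    totals: dict[str, float] = defaultdict(float)
    for order in orders:
        if order["status"] == "completed":  # cancelled orders are not revenue
            totals[order["customer_id"]] += order["amount"]
    return [{"customer_id": c, "revenue": round(r, 2)} for c, r in sorted(totals.items())]


def check_not_null(rows: list[dict], column: str) -> None:
    """Fail fast if any row lacks a value in `column`."""
    missing = sum(1 for r in rows if r.get(column) is None)
    if missing:
        raise ValueError(f"{missing} rows have null {column}")


def check_unique(rows: list[dict], column: str) -> None:
    """Fail fast if `column` is not a unique key."""
    values = [r[column] for r in rows]
    if len(values) != len(set(values)):
        raise ValueError(f"duplicate values in {column}")


def test_customer_revenue() -> None:
    """Unit test on a tiny fixture: cancelled orders must be excluded."""
    fixture = [
        {"customer_id": "a", "amount": 10.0, "status": "completed"},
        {"customer_id": "a", "amount": 5.5, "status": "completed"},
        {"customer_id": "b", "amount": 99.0, "status": "cancelled"},
        {"customer_id": "b", "amount": 20.0, "status": "completed"},
    ]
    result = customer_revenue(fixture)
    check_not_null(result, "customer_id")
    check_unique(result, "customer_id")
    assert result == [
        {"customer_id": "a", "revenue": 15.5},
        {"customer_id": "b", "revenue": 20.0},
    ]


test_customer_revenue()
print("customer_revenue: all checks passed")
```

Keeping the transformation, its data checks, and its fixture side by side is what lets one component change without silently breaking the decision flows downstream.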

From analytics to decisioning

Decision intelligence (often referred to as “decisioning” in operational contexts) treats decisions as engineered assets, characterised by inputs, logic, outcomes, and feedback loops. It’s a discipline that designs how choices are made, measured, and improved—not just how reports are viewed. In other words, analytics produces signals; decisioning specifies how those signals change pricing, credit limits, inventory movements, or care pathways—and how we evaluate whether that was wise.
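A decision becomes an engineered asset once its inputs, logic, action, and outcome live in one record. The Python sketch below assumes a hypothetical `DecisionRecord` schema; comparing the outcome with a single baseline is a deliberate simplification, and a real evaluation would more likely use a holdout group or an A/B comparison.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Optional


@dataclass
class DecisionRecord:
    """Hypothetical schema for one decision treated as an auditable asset."""

    decision_type: str              # e.g. "price_change", "credit_limit"
    inputs: dict[str, Any]          # the signals the logic consumed
    logic_version: str              # version of the rules/model that produced the action
    action: str                     # what was decided
    made_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    outcome: Optional[float] = None  # filled in later by the feedback loop

    def record_outcome(self, value: float) -> None:
        """Feedback step: attach the observed result so the decision can be evaluated."""
        self.outcome = value


def was_wise(record: DecisionRecord, baseline: float) -> Optional[bool]:
    """Simplified evaluation against a baseline; None until the outcome is known."""
    if record.outcome is None:
        return None
    return record.outcome > baseline


rec = DecisionRecord(
    decision_type="price_change",
    inputs={"sku": "A-100", "elasticity": -0.4},  # illustrative values
    logic_version="pricing-rules-v3",
    action="raise price 2%",
)
print(was_wise(rec, baseline=1000.0))  # None: outcome not captured yet
rec.record_outcome(1040.0)
print(was_wise(rec, baseline=1000.0))  # True: outcome beat the baseline
```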

Generative decisioning layers modern generative models on top of an engineered decision flow. LLMs and other generative models can summarise conflicting evidence, create candidate actions (“raise price 2% on SKUs with low elasticity”), simulate impacts, and draft a rationale for human review. A growing body of research is examining how generative AI can improve decision quality and speed, particularly when uncertainty is high and information is fragmented.
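The central design point is that the model proposes structured candidates and a human reviews them. The sketch below assumes the model is asked to return a JSON list; `fake_llm` is a hypothetical stand-in that returns canned text, so no real model API is called, and the field names are illustrative.

```python
import json
from dataclasses import dataclass


@dataclass
class Candidate:
    """One model-proposed action; stays pending until a human reviews it."""

    action: str
    rationale: str
    expected_impact: str
    status: str = "pending_review"


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; ignores the prompt, returns canned JSON."""
    return json.dumps([
        {
            "action": "raise price 2% on SKUs with low elasticity",
            "rationale": "Illustrative only: demand for these SKUs has been price-insensitive.",
            "expected_impact": "margin up, volume roughly flat",
        },
        {"action": "cut price 10%"},  # malformed: no rationale or impact
    ])


REQUIRED = ("action", "rationale", "expected_impact")


def propose(context: str) -> list[Candidate]:
    """Request candidates and keep only those that match the expected schema."""
    raw = json.loads(fake_llm(f"Propose actions as a JSON list, given:\n{context}"))
    candidates = []
    for item in raw:
        if not isinstance(item, dict) or not all(k in item for k in REQUIRED):
            continue  # drop malformed output rather than guess missing fields
        candidates.append(Candidate(*(str(item[k]) for k in REQUIRED)))
    return candidates


for c in propose("Q3 elasticity report; competitor price feed"):
    print(c.status, "|", c.action)
```

Validating model output against a fixed schema, and discarding anything malformed, keeps free-form generation from flowing straight into execution.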

Why now?

Three shifts make this pairing timely:

- APIs over platforms. Warehouses, vector stores, rule engines, and model registries now expose clean interfaces, so you can compose components across vendors instead of being locked into one suite.

- Copilots everywhere. Generative models can read policies, pull context from knowledge bases, and propose actions in natural language, which makes them useful in complex, cross-functional decisions. Emerging work even explores LLMs facilitating group deliberations by surfacing overlooked evidence and trade-offs.

- Governance by design. With contracts for data products and decision policies, you can attach lineage, tests, approvals, and rollback rules directly to the decision path, not as afterthoughts.
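One way to attach a contract to a data product, so each batch is checked before it reaches a decision path, is sketched below. It assumes a hypothetical `DataContract` with required column types and a freshness SLA; production contract tooling would usually carry more, such as ownership and versioning rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract a data product publishes to its consumers."""

    name: str
    version: str
    columns: dict[str, type]   # required column -> expected Python type
    max_staleness: timedelta   # freshness SLA


def violations(contract: DataContract, rows: list[dict], loaded_at: datetime) -> list[str]:
    """Return every contract breach; an empty list means the batch may feed decisions."""
    problems = []
    if datetime.now(timezone.utc) - loaded_at > contract.max_staleness:
        problems.append(f"{contract.name}: stale (loaded {loaded_at.isoformat()})")
    for i, row in enumerate(rows):
        for col, expected in contract.columns.items():
            if col not in row:
                problems.append(f"row {i}: missing {col}")
            elif not isinstance(row[col], expected):
                problems.append(f"row {i}: {col} is not {expected.__name__}")
    return problems


orders_v2 = DataContract(
    name="orders",
    version="2.1.0",
    columns={"order_id": str, "amount": float},
    max_staleness=timedelta(hours=6),
)
batch = [{"order_id": "o-1", "amount": 12.5}, {"order_id": "o-2", "amount": "12.5"}]
for problem in violations(orders_v2, batch, loaded_at=datetime.now(timezone.utc)):
    print(problem)  # row 1: amount is not float
```

Returning all breaches rather than stopping at the first gives the owning team a complete picture, and the decision path can simply refuse any batch with a non-empty list.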

A working blueprint

Think in layers—not as a rigid reference model, but as a checklist you can adapt:

- Data products: Curated, contract-driven tables with named owners and SLAs.

- Semantic/metrics layer: One place to define “active customer” or “unit margin” so every tool agrees.

- Transformation & features: Modular jobs that create features for models (e.g., recency/frequency, anomaly flags).

- Decision logic: Business rules, constraints, and policies (e.g., regulatory caps, risk thresholds).

- Generative layer: LLMs that ingest context (docs, tickets, forecasts) and produce candidate decisions plus explanations and alternatives.

- Orchestration & human-in-the-loop: Approval queues, A/B rollout, canary checks, and audit trails.

- Feedback: Outcome capture that flows back into both the rules and the models, closing the loop.

At a retailer, this might drive “next best action” across channels; in operations, it can enable dynamic re-routing of shipments; in finance, it can support explainable line-item variance analysis with suggested corrections. In every case, humans approve the high-impact moves.

Guardrails that matter

Generative decisioning is powerful, but it must be disciplined:

- Decision boundaries. Be explicit about which choices are automatable (low risk, reversible) and which require sign-off.

- Data minimisation. Only expose the model to the fields needed for the decision; keep PII out unless strictly necessary.

- Attribution & counterfactuals. For any automated decision, record the inputs, model versions, and rules that influenced it; run periodic counterfactual tests to catch drifted logic.

- Policy grounding. Ground generation on approved artefacts (policy docs, playbooks) rather than the open web; require citations to those artefacts in the model’s rationale.

- Outcome audits. Monitor decision lift, latency, fairness, and override rates; use these to tune both rules and models over time.
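Several of these guardrails reduce to small, testable checks that run before anything executes. The sketch below assumes hypothetical artefact IDs, a field allowlist, and an auto-execution limit of 500 (in whatever currency the decision uses); it covers decision boundaries, data minimisation, and policy grounding, and leaves attribution logging and audits out for brevity.

```python
from dataclasses import dataclass

# Hypothetical IDs of approved policy documents and playbooks the model may cite.
APPROVED_ARTEFACTS = {"POL-PRICING-007", "PLAYBOOK-RETENTION-2"}
# Data minimisation: the only fields ever sent to the model.
ALLOWED_FIELDS = {"segment", "tenure_months", "open_tickets"}


def minimise(record: dict) -> dict:
    """Drop every field not on the allowlist, so PII such as names and emails never leaves."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


@dataclass
class Proposal:
    action: str
    impact: float          # estimated monetary impact
    reversible: bool
    citations: list[str]   # artefact IDs the model's rationale cites


def route(p: Proposal, auto_limit: float = 500.0) -> str:
    """Decision boundaries plus a grounding check, applied before anything executes."""
    if not p.citations or not set(p.citations) <= APPROVED_ARTEFACTS:
        return "reject: rationale not grounded in approved artefacts"
    if p.reversible and p.impact <= auto_limit:
        return "auto-execute"
    return "queue for sign-off"


print(minimise({"name": "Ana", "email": "ana@example.com", "segment": "smb", "open_tickets": 2}))
print(route(Proposal("issue credit note", 120.0, True, ["PLAYBOOK-RETENTION-2"])))  # auto-execute
print(route(Proposal("raise list price 2%", 40000.0, True, ["POL-PRICING-007"])))   # queue for sign-off
print(route(Proposal("waive all fees", 80.0, True, ["a blog post"])))               # reject: ...
```

The grounding check runs first on purpose: an ungrounded proposal is rejected even when it is small and reversible.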

Where the value shows up

- Revenue and pricing. Composable metrics enable fast elasticity experiments; generative agents propose price moves within guardrails, each with a clear justification and a rollback plan.

- Supply chain. When disruptions hit, a generative layer synthesises supplier emails, port updates, and inventory signals into scenario options, while the decision layer enforces service-level commitments.

- Service and retention. LLMs summarise a customer’s journey, suggest resolution packages within policy, and explain the decision back to the agent—and to the customer—in plain English.

- Risk. Rules manage hard constraints; models score probability; the generative layer binds them into actions (“approve with limit X; schedule manual review if Y triggers”).
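The risk pattern (rules for hard constraints, a model for probability, a binding step for the action) can be sketched as follows. All thresholds are illustrative, `default_probability` is a toy formula standing in for a trained model, and the binding step is a plain template; in the design described above, a generative layer would draft the wording while the numbers still come from the rules and the score.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    income: float
    existing_debt: float
    age: int


def hard_constraints(a: Applicant) -> list[str]:
    """Rules layer: non-negotiable constraints (illustrative thresholds)."""
    failures = []
    if a.age < 18:
        failures.append("applicant under 18")
    if a.income <= 0:
        failures.append("no verifiable income")
    elif a.existing_debt > 0.6 * a.income:
        failures.append("debt-to-income above 60%")
    return failures


def default_probability(a: Applicant) -> float:
    """Model layer stand-in: a real system would call a trained, versioned model."""
    return min(0.95, 0.02 + 0.5 * a.existing_debt / a.income)


def bind(a: Applicant, review_threshold: float = 0.15) -> str:
    """Combine rules and score into one action statement."""
    failures = hard_constraints(a)
    if failures:
        return "decline: " + "; ".join(failures)
    p = default_probability(a)
    limit = round(a.income * 0.3 * (1 - p), -2)  # shrink the limit as risk rises
    action = f"approve with limit {limit:,.0f}"
    if p > review_threshold:
        action += f"; schedule manual review (default probability {p:.2f})"
    return action


print(bind(Applicant(60000, 6000, 34)))   # approve with limit 16,700
print(bind(Applicant(40000, 12000, 29)))  # approve with limit 10,000; schedule manual review (...)
print(bind(Applicant(30000, 20000, 40)))  # decline: debt-to-income above 60%
```

Checking hard constraints before scoring means the model is never consulted for applications the rules already exclude.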

Getting started (in weeks, not months)

- Pick one decision. Select a recurring, high-volume decision with known pain points (such as slow, inconsistent, or opaque processes).

- Compose the minimum stack. A reliable data product, a semantic definition or two, a small ruleset, and a single model are sufficient to get started.

- Add a generative assistant, not an overlord. Begin with suggestion-only mode that writes its own rationale and cites the artefacts it used; measure time-to-decision and override rates.

- Close the loop. Capture outcomes and feed them back—promote what works, retire what doesn’t.

- Scale horizontally. Replicate the pattern to adjacent decisions, reusing components (features, metrics, prompts) to keep complexity in check.
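Suggestion-only mode is easy to instrument. This sketch assumes a hypothetical log of `SuggestionEvent` records, computes the two metrics named above (override rate and time to decision), and applies an invented promote-or-retire rule: the median decision must beat a baseline time, with at most 20% overrides.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class SuggestionEvent:
    """One suggestion-only interaction: the assistant proposes, a human decides."""

    suggested: str
    final: str
    seconds_to_decision: float


def override_rate(events: list[SuggestionEvent]) -> float:
    """Share of suggestions the human changed before acting."""
    if not events:
        return 0.0
    return sum(e.final != e.suggested for e in events) / len(events)


def review(events: list[SuggestionEvent], baseline_seconds: float,
           max_override: float = 0.2) -> str:
    """Hypothetical rule: promote only if decisions are faster and overrides stay low."""
    if not events:
        return "insufficient data"
    faster = median(e.seconds_to_decision for e in events) < baseline_seconds
    trusted = override_rate(events) <= max_override
    return "promote" if faster and trusted else "retire or rework"


log = [
    SuggestionEvent("approve", "approve", 40.0),
    SuggestionEvent("approve", "approve", 55.0),
    SuggestionEvent("decline", "approve", 120.0),
    SuggestionEvent("approve", "approve", 35.0),
    SuggestionEvent("approve", "approve", 50.0),
]
print(f"override rate {override_rate(log):.0%}")  # override rate 20%
print(review(log, baseline_seconds=90.0))         # promote
```

The median is used rather than the mean so that one slow, contested case does not dominate the time-to-decision signal.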

If you’re designing team capability (say, data analytics training in Bangalore for analysts and decision scientists), build practical labs around this blueprint: define a decision, publish a small semantic layer, implement rules and a baseline model, then add a grounded generative assistant and measure the uplift. The aim is to rehearse the entire loop, not just to optimise model accuracy.

The mindset shift

Composable analytics and generative decisioning are less about shiny tools and more about institutional memory. Every improvement becomes a reusable block, and every decision leaves a trail that others can learn from. Do that consistently and you’ll move from “insights that inform” to “systems that decide”, with clarity on who changed what, why, and with what result. The payoff to aim for is faster cycle times, better auditability, and fewer late-night firefights, because decisions are designed rather than improvised.

For leaders plotting the next wave of capability (hiring, upskilling, and platform choices), treat this as an operating model. Invest in contracts for data and metrics, standardise decision policies, and introduce a carefully grounded generative layer to add speed and clarity. And if you’re curating a learning path, capstone projects that implement one real decision loop end to end will teach more than theory alone, especially for cohorts enrolled in data analytics training in Bangalore who need hands-on fluency with modern, modular stacks.